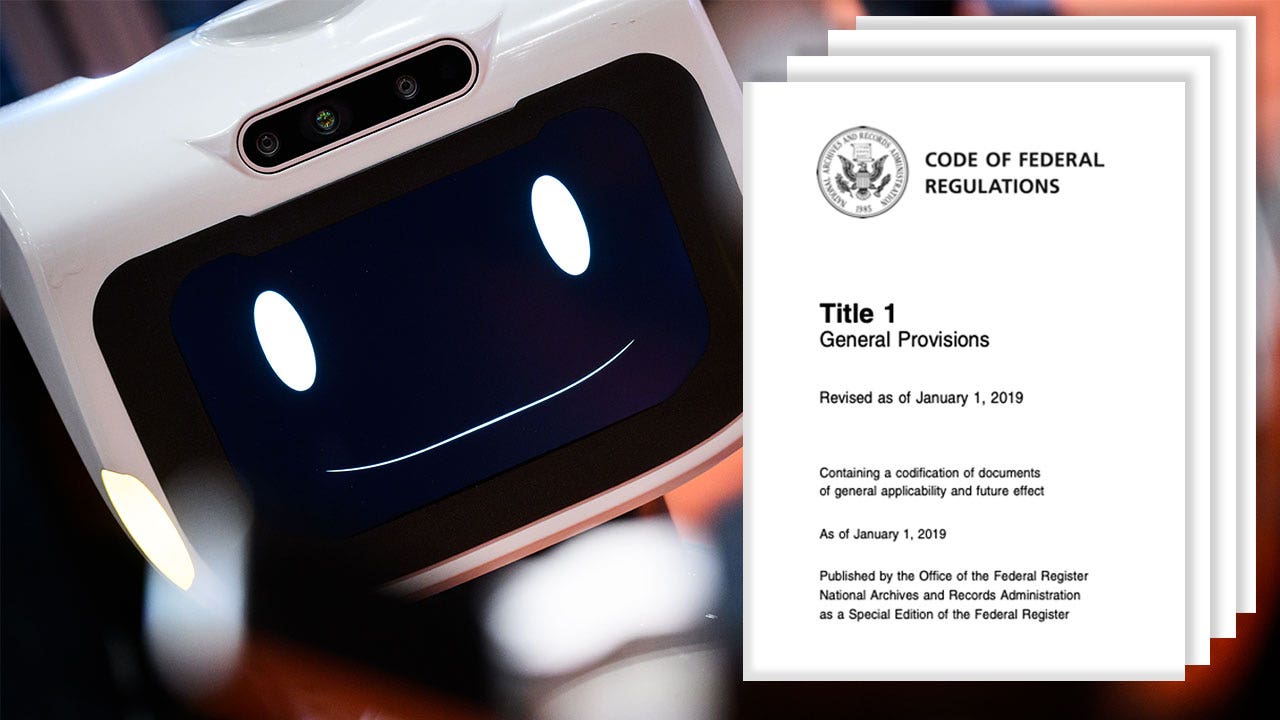

A federal agency is pondering whether artificial intelligence might someday be used to help the government identify duplicative or overly burdensome federal rules that need to be cut back.

But officials are already hearing from skeptics who doubt AI will ever be powerful enough to wade through and understand the hundreds of thousands of pages of detailed federal rules.

The Administrative Conference of the United States (ACUS) is an independent federal agency that works to increase the efficiency and fairness of regulations. In early May, ACUS released a report it commissioned on how AI and other algorithmic tools might be used to conduct retrospective reviews of federal rules to improve them.

That report said AI might already be able to handle ‘housekeeping’ chores, such as finding typos or incorrect citations, but said AI might also be trained to do much more.

‘A tool could identify regulations that are either outdated or redundant with another rule,’ the report said, even as it acknowledged the challenges of this work. ‘Both of these inquiries may not have an objective correct answer: A regulation might be old, but is it obsolete? A rule’s text or regulated activity might overlap with another’s, but is one superfluous?’

‘An algorithm performing even more substantive tasks might identify existing regulations it believes could benefit from clarification or are overly burdensome,’ it added. ‘These are both arguably completely subjective inquiries: When is a regulation too complex or burdensome?’

The report quoted several federal staffers, most of whom were open to using AI for retrospective regulatory reviews.

But it also quoted groups affected by federal regulations, and they were much more tentative about how AI might be used. Most said that, at best, AI would be a tool for flagging rules that are ready to be reviewed, after which people would have to do the reviewing.

Two ‘skeptics’ in this group argued that AI would have trouble penetrating the dense language of federal rules to be useful in any way.

‘According to the skeptics, it would be nearly impossible to write an all-encompassing algorithm that would be accurate to flag rules in need of review given that so much of regulatory text is incredibly difficult to unpack and is so context-specific,’ the report said.

‘The concern raised was that so much of the regulatory quality depends on agency expertise and experience. The representatives were skeptical that AI could replace or even channel this.’

The report said another possible hurdle is whether government officials will want to use AI this way. The Trump administration introduced the use of AI in the regulatory process as a deregulatory tool in the Department of Health and Human Services and used AI in the Defense Department to help people understand the Pentagon’s vast network of rules.

The two ‘skeptics’ said the Trump administration’s use of AI may have ‘poisoned the well’ and could make it difficult for agencies to agree to explore AI further.

‘One representative went further to elaborate that it ‘soured the community’s thinking about retrospective review’ given that it used retrospective review ‘as a smokescreen for partisan objectives,’’ the report said.

Several federal agencies are represented in ACUS, and the group’s research director, Jeremy Graboyes, told Fox News Digital there is some hesitancy inside the government on how to use AI.

‘I think you probably see a range of emotions,’ Graboyes said, noting that the positives of cost-effectiveness and accuracy are potentially outweighed by problems like possible bias that can be built into AI systems. ‘All the debates you’re seeing outside the government, you’re seeing inside government as well.’

ACUS is recommending that federal agencies start by using AI to identify redundant federal rules and fix small errors, and that they use open-source AI tools for this work. ACUS also said agencies should disclose when they use AI or other algorithmic tools in the process of reviewing regulations.

ACUS is set to meet in mid-June in Washington to decide whether to adopt the report and its recommendations. From there, Graboyes said, ACUS would work with federal agencies on implementing the recommendations, a start that he said could lead the agency to recommend more advanced work with AI tools in the years ahead.

‘We’ll be doing more work in the AI space,’ he said.