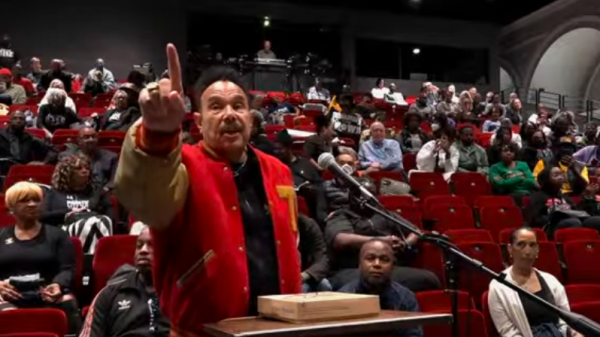

OpenAI CEO Sam Altman said people should not try to ‘anthropomorphize’ artificial intelligence and should discuss the powerful technology as a ‘tool’ and not a ‘creature.’

‘I think there’s a huge amount of speculation on that question,’ Altman told reporters Tuesday on Capitol Hill when asked how quickly AI could become ‘self-aware’ if Congress does not regulate the technology.

The line of questioning had echoes of the ‘Terminator’ film series, in which AI brings about the apocalypse on the day it becomes ‘self-aware.’

‘I think it’s very important that we keep talking about this as a tool, not a creature, because it’s so tempting to anthropomorphize it,’ he added. ‘I totally understand where the anxiety comes from. I think it’s the wrong frame … the wrong way to think about it.’

Altman appeared before the Senate Judiciary Subcommittee on Privacy, Technology, and the Law Tuesday morning to discuss potential avenues for regulating artificial intelligence, and he acknowledged threats the powerful technology could pose to the world.

‘As this technology advances, we understand that people are anxious about how it could change the way we live,’ Altman told the lawmakers. ‘We are too. But we believe that we can and must work together to identify and manage the potential downsides so that we can all enjoy the tremendous upsides.

‘We think that regulatory intervention by governments will be critical to mitigate the risks of increasingly powerful models.’

OpenAI is the artificial intelligence lab that released the wildly popular chatbot, ChatGPT, last year. The chatbot is able to mimic human conversation after it is given prompts by human users. Following the release of the technology, other companies in Silicon Valley and across the world launched a race to build more powerful artificial intelligence systems.

Altman added Tuesday that his greatest fear amid his company’s work is that the technology could cause major harmful disruptions for people.

‘My worst fears are that we cause significant — we, the field, the technology industry — cause significant harm to the world,’ Altman said. ‘I think that could happen in a lot of different ways. It’s why we started the company.’

‘I think if this technology goes wrong, it can go quite wrong, and we want to be vocal about that,’ he added. ‘We want to work with the government to prevent that from happening.’

Following the hearing, Altman provided two examples to Fox News Digital of ‘scary AI,’ noting that the technology ‘can become quite powerful.’

‘An AI that could design novel biological pathogens,’ he said of ‘scary AI’ examples. ‘An AI that could hack into computer systems. I think these are all scary.’